I have a couple of test VMs on my home network, both Linux and Windows servers. They sit behind my NAT router, which supports port forwarding. Since we have done several integrations lately, we needed our test TADDM server to scan these VMs. The TADDM server has full internet access, so it can connect to any device that is reachable from the internet. We performed the following steps to scan these devices:

– Dedicate a Linux-based box as the anchor in the internal network.

– Change the SSH port on that box to a custom high port that my internet provider lets through (we used 9901). With OpenSSH this is the Port setting in /etc/ssh/sshd_config.

– On the NAT router, forward the selected SSH port (9901 in our case) and the default anchor port 8497 to the dedicated Linux box.
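Before going further, it can help to confirm from the TADDM server that the forwarded ports actually reach the anchor box. The sketch below is a minimal Python check, assuming Python 3 is available on the TADDM server; it simply attempts a TCP connection to each forwarded port. The script name and command-line usage are illustrative only. Port 8497 presumably only answers once TADDM has deployed the anchor, so a closed result on that port before the first discovery is not necessarily a problem.

```python
import socket
import sys

# Ports forwarded on the NAT router in this setup: custom SSH port and anchor port.
FORWARDED_PORTS = (9901, 8497)


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and unreachable hosts all end up here.
        return False


def report(host: str, ports=FORWARDED_PORTS) -> dict:
    """Check each port on host, print one status line per port, return the results."""
    results = {port: port_is_open(host, port) for port in ports}
    for port, is_open in results.items():
        print(f"{host}:{port} -> {'open' if is_open else 'closed or filtered'}")
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python3 check_ports.py <router-public-ip>
    report(sys.argv[1])
```

Run it from the TADDM server against the router's public address; running it from inside the home network would not exercise the port forwarding.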

– Dedicate a Windows-based box as the Windows gateway in the internal network. This is required because we also want to scan Windows servers.

– Follow this procedure to install the Cygwin SSH server on that Windows box, leaving the SSH port at the default (22).

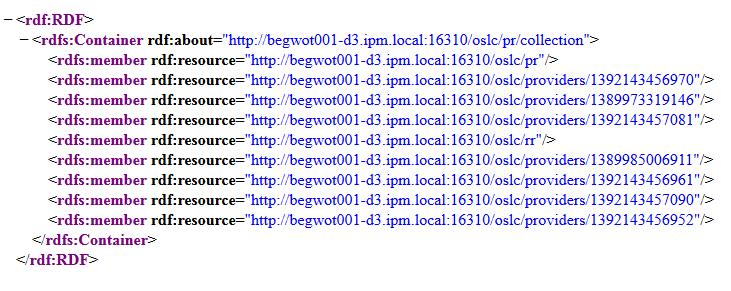

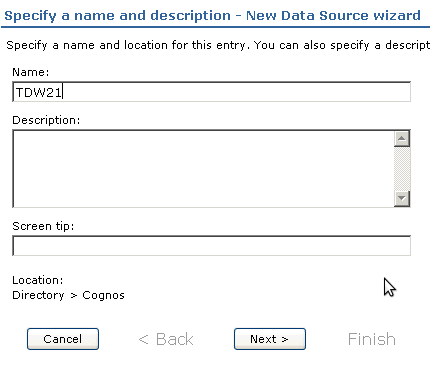

– Create a scope for the internal IP range. We named it Scope_internal_ip and added the range 192.168.1.0-192.168.1.255, since this is the range our local network uses.

– Create a scope for the anchor box that contains only the router's IP address. We named this scope Scope_Anchor.
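The two scopes do not overlap: Scope_internal_ip covers the private 192.168.1.x network, while Scope_Anchor holds only the router's address that the TADDM server reaches over the internet. A short Python sketch using the standard ipaddress module can confirm that the range entered for Scope_internal_ip is exactly one /24 network and show which scope a given address falls in. The router address 203.0.113.10 is a hypothetical placeholder from the documentation range; substitute your own.

```python
import ipaddress

# Scope_internal_ip: the inclusive range 192.168.1.0-192.168.1.255.
INTERNAL_FIRST = ipaddress.IPv4Address("192.168.1.0")
INTERNAL_LAST = ipaddress.IPv4Address("192.168.1.255")

# Scope_Anchor: a single address. Hypothetical placeholder for the router's public IP.
ROUTER_PUBLIC_IP = ipaddress.IPv4Address("203.0.113.10")

# The inclusive range collapses to a single CIDR block.
RANGE_AS_CIDR = list(ipaddress.summarize_address_range(INTERNAL_FIRST, INTERNAL_LAST))


def in_internal_scope(ip: str) -> bool:
    """True if ip lies within the Scope_internal_ip range (inclusive)."""
    return INTERNAL_FIRST <= ipaddress.IPv4Address(ip) <= INTERNAL_LAST


def in_anchor_scope(ip: str) -> bool:
    """True if ip is the single address defined in Scope_Anchor."""
    return ipaddress.IPv4Address(ip) == ROUTER_PUBLIC_IP
```

For example, the Windows gateway at 192.168.1.7 falls in Scope_internal_ip, while the router address falls only in Scope_Anchor.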

– TADDM can use a non-default SSH port for any scope you specify. To do this, add new lines to the collation.properties file (usually located under ./taddm/dist/etc): append the scope name to the com.collation.SshPort property, for example com.collation.SshPort.Scope_internal_ip. You can define a different SSH port for each scope. Make sure you keep the original com.collation.SshPort=22 setting as the default. Port 9901 also has to be added to the com.collation.pingagent.ports setting, because TADDM only pings the ports listed in that value. This setting is not present in collation.properties by default, so it has to be added once a non-standard port is in play.

Add or change the following in collation.properties:

```properties
#=========================
# SSH Settings
#=========================

# Default port to use for all ssh connections
com.collation.SshPort=22

# This sets SSH to port 9901 for scope Scope_internal_ip
com.collation.SshPort.Scope_internal_ip=9901

# This sets the ping agent to use port 9901 as well when checking the availability of the target.
com.collation.pingagent.ports=22,135,9901
```
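To sanity-check these settings, the following Python sketch parses the snippet, resolves the SSH port per scope as described above (a scope-specific com.collation.SshPort.<scope> entry overrides the global com.collation.SshPort default), and reports any SSH port the ping agent would not probe. It is a simplified, hypothetical model: the parser only handles plain key=value lines rather than the full Java properties syntax, a missing pingagent.ports value is treated as empty, and none of this is how TADDM itself reads the file.

```python
# The settings from collation.properties as shown above.
PROPERTIES = """
# Default port to use for all ssh connections
com.collation.SshPort=22
com.collation.SshPort.Scope_internal_ip=9901
com.collation.pingagent.ports=22,135,9901
"""


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def ssh_port_for_scope(props: dict, scope: str) -> int:
    """Scope-specific SshPort if present, otherwise the global default."""
    default = props["com.collation.SshPort"]
    return int(props.get(f"com.collation.SshPort.{scope}", default))


def ping_ports(props: dict) -> set:
    """Ports listed in the comma-separated com.collation.pingagent.ports value."""
    raw = props.get("com.collation.pingagent.ports", "")
    return {int(p) for p in raw.split(",") if p.strip()}


def missing_ping_ports(props: dict, scopes) -> set:
    """SSH ports used by any of the given scopes that the ping agent would not probe."""
    return {ssh_port_for_scope(props, s) for s in scopes} - ping_ports(props)
```

With the snippet above, Scope_internal_ip resolves to 9901, Scope_Anchor falls back to 22, and no port is missing from the ping list; dropping 9901 from com.collation.pingagent.ports would make the check flag it.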

– Create an anchor using the Discovery Management Console. We used the IP address of our router here and selected the Scope_internal_ip scope.

– Create a Windows gateway using the Discovery Management Console, with the internal IP address of the Windows gateway box (192.168.1.7 in our case).

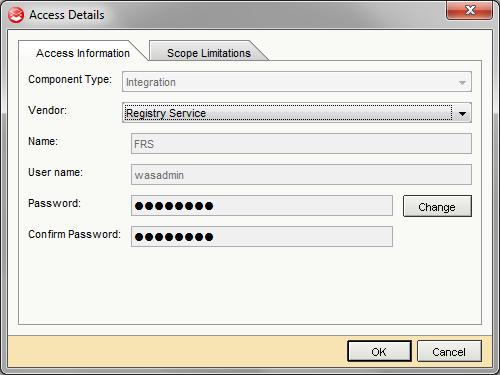

– Create access list entries for each ID involved. We used root for the Linux anchor, Administrator for the Windows gateway, and taddm for the rest of the Windows boxes on the internal network. The Administrator and taddm entries have Scope_internal_ip as their scope limitation, and root has Scope_Anchor.
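The scope limitations on the access list determine which credentials are considered for which target. The following Python sketch models only that filtering for the three entries described above: it finds the scopes that contain a target address and returns the users whose scope limitation matches. The class, function names and the placeholder router address 203.0.113.10 are hypothetical, and the sketch does not model how TADDM orders or retries credentials.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEntry:
    user: str
    purpose: str  # descriptive only
    scope: str    # scope limitation name


# Hypothetical placeholder for the router's public IP (the only address in Scope_Anchor).
ROUTER_PUBLIC_IP = "203.0.113.10"

# Each scope as an inclusive (first, last) address range.
SCOPES = {
    "Scope_internal_ip": (ipaddress.IPv4Address("192.168.1.0"),
                          ipaddress.IPv4Address("192.168.1.255")),
    "Scope_Anchor": (ipaddress.IPv4Address(ROUTER_PUBLIC_IP),
                     ipaddress.IPv4Address(ROUTER_PUBLIC_IP)),
}

ACCESS_LIST = [
    AccessEntry("root", "Linux anchor", "Scope_Anchor"),
    AccessEntry("Administrator", "Windows gateway", "Scope_internal_ip"),
    AccessEntry("taddm", "other Windows boxes", "Scope_internal_ip"),
]


def scopes_containing(ip: str) -> set:
    """Names of all scopes whose range includes ip."""
    addr = ipaddress.IPv4Address(ip)
    return {name for name, (first, last) in SCOPES.items() if first <= addr <= last}


def candidate_users(ip: str) -> list:
    """Users whose scope limitation covers ip, listed in access-list order."""
    scopes = scopes_containing(ip)
    return [entry.user for entry in ACCESS_LIST if entry.scope in scopes]
```

For the router address only root qualifies, while an internal host such as 192.168.1.7 gets Administrator and taddm; an address outside both scopes gets no credentials at all.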

With the environment set up, start a new discovery from the Discovery Management Console, select the two newly created scopes, and run the scan. This should deploy the anchor on the dedicated Linux box, identify and install the Windows gateway, scan the internal network, and scan every box on which the taddm ID is active.